Scott Galloway Explains Why Age Gating Social Media Is Both Necessary and Doable

The companies can do it, but they're incentivized to fight it

Introduction from Jon Haidt:

Why do modern societies impose minimum legal ages for many activities and products, such as drinking, smoking, gambling, porn, driving, skydiving, or watching R or X-rated movies? There are many reasons, but these four stand out for this list of activities:

Graphic sexuality

Graphic violence

Addiction risk

Significant health/safety hazards

For these kinds of activities, we give adults great leeway to make their own decisions. They have mature prefrontal cortices; they are better than children at inhibiting impulses and evaluating risks. When it comes to children, however, there is a broad consensus that companies who profit from children by exposing them to any of these four harms must stop. We understand that these companies—which typically place the interests of their shareholders above those of any other stakeholder—must be strongly incentivized to enforce minimum age requirements by the threat of lawsuits, fines, and penalties. Unless they are social media companies.

When used normally, social media exposes children to all four of these harms, often without the knowledge or consent of the child’s parents. Once children get their own smartphones or tablets and social media accounts, they are likely to begin spending five hours a day on these platforms, on average, which means 35 hours per week, roughly a full-time job’s worth of work producing and consuming content for the platforms. During those long scrolling hours, many will be exposed to pornographic images, videos urging them to undertake dangerous challenges, and videos of violence committed by real people against real people. Something on the order of 10% will develop “problematic use,” which sure looks like other behavioral addictions, such as gambling. Their risk of anxiety, depression, and self-harm will increase substantially, especially if they are girls. Their risk of falling prey to sextortion (and therefore suicide) will increase, especially if they are boys.

On November 29, the Australian government took the boldest move yet by any nation in the long struggle to protect children from these harms by changing the incentives within which the companies operate. The new law sets a minimum age of 16 for opening accounts (effectively raising the age from 13). The law will take effect in 12 months' time, during which the government will work with platforms to determine the best way to do age assurance. Penalties will be imposed on platforms, not parents or children.

The move by the Australian government is very popular with Australians. Most people understand that children are different from adults and that minimum ages are appropriate for many activities. The main objections to the bill, from what I have read, revolve around privacy concerns, particularly the idea that the new law will require people to give their private identity documents to social media companies. But this is not true. The bill was amended in the Australian Senate last week to address this concern by explicitly stating that no company can offer only the use of a government ID; they have to offer other options (and there are many other options).

The most eloquent statement on the urgency of implementing minimum ages for social media was made by my friend and NYU-Stern colleague Scott Galloway in an essay he wrote last June on his blog No Mercy / No Malice. With his multiple podcasts (Prof G, and Pivot) and his many books about tech, finance, and society, Scott knows far more about the tech industry than I do. He also writes with more moral force, emotion, and humor than I do. Scott has two teenage sons, and he cares and speaks a great deal about the threats to boys, as well as to girls, in the digital age.[1]

So when Australia passed its landmark social media legislation and some critics said it was not needed or not possible, I asked Scott if I could bring his powerful essay to the readership at After Babel. Here it is. I believe it will increase the confidence of legislators and citizens in Australia that they are doing the right thing. I hope it will inspire leaders, legislators, and citizens around the world to take collective action in their own countries.

– Jon Haidt

Age Gating

By Scott Galloway

Social media is unprecedented in its reach and addictive potential — a bottomless dopa bag that fits in your pocket. For kids it poses heightened risks. The evidence is overwhelming and has been for a while. It just took a beat to absorb how brazen the lies were — “We’re proud of our progress.” Social media can be dangerous. That doesn’t make it net “bad” — there’s plenty of good things about it. But similar to automobiles, alcohol, and AK-47s, it has a mixed impact on our lives. It presents dangers. And one of the things a healthy society does is limit the availability of dangerous products to children, who lack the capacity to use them safely. Yet, two decades into the social media era, we permit unlimited, all-ages access to this dangerous, addictive product. The reason: incentives. Specifically, the platforms, dis-incentivized to age-gate their products, throw sand in the gears of any effort to limit access. To change the outcome, we must change the incentives.

Adult Swim

I’m a better person when I drink, more interesting and more interested. One of the reasons I work out so much is so I can continue to drink (muscle absorbs alcohol better than fat does). Kids are different, and we’ve long been comfortable treating them differently. In 1838, Wisconsin forbade the selling of liquor to minors without parental consent, and, by 1900, state laws setting minimum drinking ages were common. There’s a good case to be made that the U.S. alcohol limit of 21 is too high, but nobody would argue we should dispense with age-gating booze altogether.

That trend has paralleled laws restricting childhood access to other things. The right to bear arms is enshrined in the Constitution, yet courts don’t blink at keeping guns out of the hands of children, even as they dismantle every other limitation on gun ownership. If there’s a lobbying group trying to give driver’s licenses to 13-year-olds, I can’t find it. Age of consent laws make sex with children a crime; minors are not permitted to enter into contracts; we limit the hours and conditions in which they can work; they cannot serve in the military or on juries, nor are they allowed to vote. (That last one we may want to reconsider.) These are not trivial things. On the contrary, we exclude children from or substantially limit their participation in many core activities of society.

Late Night With the Dawg

The only time I have appeared on late-night TV was when Jimmy Fallon mocked me — showing a CNN video clip where I said “I’d rather give my 16-year-old a bottle of Jack and weed than Instagram.” Four thousand likes and 265,000 views later, it appears America agrees. My now almost 17-year-old son has engaged with all three substances. Alcohol and Instagram make him feel worse afterward — not sure about weed. However, he is restricted from carrying a bottle of Jack in his pocket, and his parents would ask for a word if his face was hermetically sealed to a bong. Note: Spare me any bullshit parenting advice from nonparents or therapists whose kids don’t come home for the holidays.

He, we, and society restrict his access to these substances. And, when he abstains from drinking/smoking, he isn’t sequestered from all social contact and the connective tissue of his peer group. We freaked out when we found (as you will if you have boys) porn on one of his devices. But the research is clear: We should be more alarmed when we find Instagram/Snap/TikTok on his phone. Mark Zuckerberg and Sheryl Sandberg are the pornographers of our global economy. Actually, that’s unfair to pornographers.

Peer Pressure

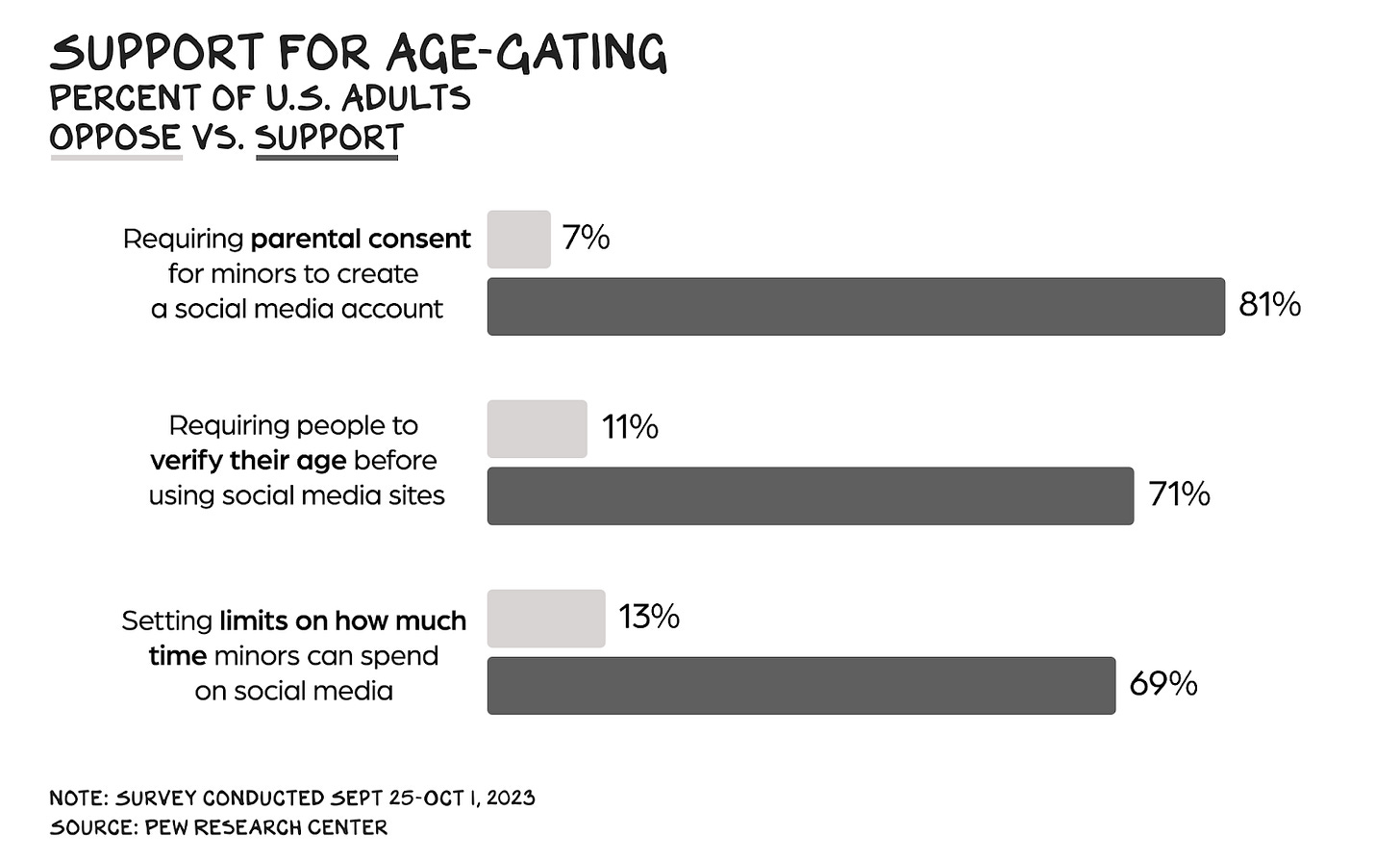

Age-gating social media is hugely popular. Over 80% of adults believe parental consent should be required for social media, and almost 70% want platforms to limit the time minors spend on them.

Those numbers are from last fall, before my NYU colleague Jonathan Haidt published The Anxious Generation, which builds on the work of Jean Twenge and others, making the most forceful case yet that social media is hurting our children. Reviewing the shocking increase in depression, self-harm, and general suffering our children are experiencing and the explanations offered by the platform apologists, Professor Haidt highlights the twin specters of social media and mobile devices and the lasting damage they’re doing to a generation. Unconstrained smartphone use, Haidt observes, has been “the largest uncontrolled experiment humanity has ever performed on its own children.” And the results are in.

Legislatures are responding. States from California to Utah to Louisiana have passed laws that limit access to social media based on age. If you haven’t noticed any change in the behavior of the platforms, however, that’s because courts have blocked nearly all of them. A social media and digital commerce trade group called NetChoice is quick to sue any state that interferes with its members’ ability to exploit children for maximum profit.

“Complicated”

Judges are siding with the platforms, and probably not because they enjoy seeing depressed teenagers fed content glorifying self-harm, or teenage boys committing suicide after being sextorted. The platforms and other opponents of these laws, such as the ACLU, make two main points: First, they claim that verifying age online is too complicated, requiring the collection of all sorts of information about users, and won’t work in all cases. Second, requiring platforms to collect this information creates free speech, privacy, and security concerns. (The platforms also deny their products are harmful to children.)

On their face, these points are valid. It is more difficult to confirm age online, where there’s no clerk at the counter who can ask to see your driver’s license and reference your face. And these platforms have proven reckless with personal data. It’s sort of a “they’re so irresponsible, but we can’t take action” dilemma.

But these objections are not about age verification, children’s rights, free speech, or privacy. They are concerns about the platform companies’ capabilities. Their arguments boil down to the assertion that these multibillion-dollar organizations, who’ve assembled vast pools of human capital that wield godlike technology, can’t figure out how to build effective, efficient, constitutionally compliant age-verification systems to protect children. If this sounds like bullshit, trust your instincts.

Illusion of Complexity

This isn’t a conversation re the realm of the possible, but the profitable. When you pay an industry not to understand something, it will never figure it out. (Just look at the tobacco industry’s inability to see a link with cancer.) What’s more challenging, figuring out if someone is younger than 16, or building a global real-time communication network that stores a near-infinite amount of text, video, and audio retrievable by billions of simultaneous users in milliseconds with 24/7 uptime? The social media giants know where you are, what you’re doing, how you’re feeling, and if you’re experiencing suicidal ideation … but they can’t figure out your age. You can’t make this shit up.

The platforms could design technology that reliably collects sufficient information to confirm a user’s age, then wipes the information from its servers. They could create a private or public entity that processes age verification anonymously. Remember the blockchain? Isn’t this exactly the kind of problem it was supposed to solve? They could deploy AI to estimate when a user is likely underage based on their online behaviors, and seek age verification from at-risk people. If device manufacturers (or just the device OS duopoly of Apple and Alphabet) were properly incentivized, they could implement age verification on the device itself. (This is what Meta says it wants, when it isn’t fighting age-verification requirements.) Or, crazy idea, they could stop glorifying suicide and pushing pornography to everyone.
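To make the first and third of those options concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not any platform's actual system: the names `AgeAttestation`, `verify_and_discard`, and `needs_verification` are hypothetical, the 16-year threshold is borrowed from the Australian law discussed in the introduction, and the "underage likelihood" score stands in for whatever behavioral model a platform might build.

```python
from dataclasses import dataclass
from datetime import date

MINIMUM_AGE = 16  # assumption: the Australian threshold; other laws differ


@dataclass(frozen=True)
class AgeAttestation:
    """The only record kept: a yes/no answer and the date it was checked."""
    meets_minimum_age: bool
    checked_on: date


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    years = today.year - birth_date.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def verify_and_discard(evidence: dict, today: date) -> AgeAttestation:
    """Confirm age from submitted evidence, then wipe the evidence.

    `evidence` is a hypothetical dict holding a verified "birth_date"
    plus any raw material (document scans, selfies) used to obtain it.
    """
    birth_date = evidence.pop("birth_date")
    attestation = AgeAttestation(age_on(birth_date, today) >= MINIMUM_AGE, today)
    evidence.clear()  # nothing sensitive survives this call
    return attestation


def needs_verification(underage_likelihood: float, threshold: float = 0.5) -> bool:
    """Ask for verification only when a (hypothetical) behavioral model
    scores the account as likely underage."""
    return underage_likelihood >= threshold


evidence = {"birth_date": date(2010, 6, 1), "document_scan": b"raw bytes"}
result = verify_and_discard(evidence, today=date(2025, 1, 1))
print(result.meets_minimum_age, evidence)
```

The design choice worth noticing is that the platform ends up holding a single boolean and a date, which is the point of the "confirm, then wipe" option: the privacy objection applies to what is retained, and here almost nothing is.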

The reason Zuck and the other Axis powers haven’t built age verification into their platforms is it will reduce their profits (because they will serve fewer ads to kids), which will suppress their stock prices, and the job of a public company CEO is to increase the stock price. Period, full stop, end of strategic plan. So long as the negative impact to the stock price caused by the bad PR of teen suicide and depression is less than the positive impact of the incremental ad revenue obtained through unrestricted algorithmic manipulation of those teens, the rational, shareholder-driven thing to do is fight age-verification requirements.

Flip the Script

If we want the platforms to make their products safe for children, we need to change the incentives — force them to bear the cost of their damage. Internalize the externalities, in economist-speak. There are three forces powerful enough to do this: the market, plaintiff lawyers, and the government. The market solution would be to let consumers decide if they want to be exploited and manipulated. And by consumers, I mean “teenagers.” One big shortcoming of this approach is that teenagers are idiots. I have proof here, as I’m raising two and used to be one. My job as their dad is to be their prefrontal cortex until it shows up. I told my son on a Thursday it was Thursday, and he disagreed.

The next approach is to let the platforms do whatever they want, but if they harm someone, let that person sue them for damages. This is how we police product safety in almost all contexts. Did your car’s air bag explode shrapnel into your neck? Sue Takata. Did talcum powder give you cancer? Sue J&J. Did your phone burn the skin off your leg? Sue Samsung. People don’t like plaintiff lawyers, but lawsuits are a big part of the reason that more products don’t give you cancer or scald you. Nobody can successfully sue social media platforms, however, because of a 28-year-old law, known as Section 230, which gives them blanket protection against litigation.

I’ve written about the need to limit Section 230 before, and whenever I do, a zombie apocalypse of free-speech absolutists is unleashed. The proposition remains unchanged, however: If social media platforms believe they’ve done everything reasonable to protect children from the dangers of their product, then let them prove it in court. Or, better yet, let the fear of tobacco/asbestos-shaped litigation gorging on their profits motivate them to age-gate their products.

Finally, the government can go after companies whose products harm consumers. The Federal Trade Commission has fined Meta $5 billion over privacy violations to no apparent effect. This was perfect, except it was missing a 0. For these firms, $5 billion is a nuisance, not a deterrent. There’s a bill in the Senate right now, the Kids Online Safety Act, which would give the FTC new authority to go after platforms that fail to build guardrails for kids. It’s not without risk: some right-wing groups are supporting it because they believe it can be used to suppress LGBT content or anything else the patriarchy deems undesirable.[2] But I have more faith in Congress’s ability to refine a law than I do in the social platforms’ willingness to change without one.

Until we change the incentives and put the costs of these platforms where they belong, on the platforms themselves, they will not change. Legislators trying to design age-gating systems or craft detailed policies for platforms are playing a fool’s game. The social media companies can just shoot holes in every piece of legislation, fund endless lawsuits, and deploy their armies of lobbyists and faux heat shields (Lean In), all the while making their systems ever more addictive and exploitative.

Or maybe we have it wrong, and we should let our kids drink, drive, and join the military at 12. After slitting their wrists, survivors often get tattoos to cover the scars. Maybe teens should skip social media and just get tattoos.

This essay was originally published in Scott Galloway’s No Mercy / No Malice newsletter.

[1] We are both on the advisory board of Richard Reeves’s new American Institute for Boys and Men.

[2] Note from Zach Rausch: The risk that some groups may use KOSA as a means to censor content they don’t like has been addressed in the amended version of the bill. The bill does nothing to limit teens’ abilities to say whatever they want or to search for whatever they want. The provision that worries people covers what the companies do with their algorithms to recommend content to specific minors. The amendment added this provision to the bill, Section 2, regarding the Duty of Care: “Nothing in this subsection shall be construed to require a covered platform to prevent or preclude any minor from deliberately and independently searching for, or specifically requesting content; or the covered platform or individuals on the platform from providing resources for the prevention or mitigation of suicidal behaviors, substance use, and other harms, including evidence-informed information and clinical resources.” For more, read our Atlantic essay, “Social Media Companies’ Worst Argument.”

Our problem with limiting addictive things online (whether it's porn or gambling or social media) has never been technical but political.

I helped develop the first generation of Internet apps in Silicon Valley in the late '90s. Interactive websites were all custom written for specific clients. We (the programmers) were already strategizing ways to control online porn in 1997, and we came up with lots of them. The geeks (who consume a lot of porn but often wish they didn't) have known how to do it since the beginning. But the C-suite men (who also consume a lot of porn but care about money more than virtue) weren't interested. And the few C-suite women had convinced themselves that porn was female empowerment.

Ten years later, I was out of the business, but still socially involved. We (the geeks) could all see the problems with Facebook from the beginning, although I don't know anyone who realized how addictive it would be (comparable to pornography, essentially online heroin). But compared to limiting porn, limiting social media isn't even "low-hanging fruit". It's fruit sitting on the ground!

Bottom line, from a long-time programming geek: I second the point that age-gating (limiting access to adults) is absolutely technically doable. And it's so easy that anyone who says otherwise isn't an idiot but a liar. The only thing standing in the way is, and always has been, political will and John Stuart Mill's ghost.

As a parent, I can attest to the difficulty in constantly monitoring and attempting to figure out how to limit my children’s access to the internet and specific content. It’s hard, and complicated! I’m often jealous of my parents because when I was being raised, society absorbed some of that burden by regulating what was on TV, the radio, and in the movies. You had to be pretty stealthy if you wanted to sneak into an R-rated movie, let alone if you wanted to see pornography. Now, kids can just accidentally stumble upon porn that would make even the most sexually progressive amongst us blush. I still support parental responsibility; I’m just saying a little help from society in the realm of the internet would be nice.