How Is Your New School Phone Policy Working?

Introducing the TAPS: Toolkit for Assessing Phones in Schools

TL;DR Quick Summary

What is the TAPS? A free, research-informed toolkit designed to help elementary, middle and high schools evaluate their phone policy. Built for schools, usable by states, customizable to your local needs. You can learn more here, and choose to partner with The Stanford Social Media Lab to evaluate your policy.

Across the Western world, academic performance is falling. The gap between the highest and lowest performers is widening. Adolescent mental illness has reached historic highs. And in schools across the country, teachers and school leaders are reporting widespread struggles with attention, motivation, and focus in the classroom.

In response, a growing number of states and school districts have begun to target one likely contributor: the widespread use of smartphones and social media during the school day. Since 2023, thirty-seven U.S. states have adopted new statewide policies aimed at reducing phone use in schools (18 states plus Washington, DC, have full bell-to-bell policies). Entire countries, including Brazil, Finland, and Greece, have followed suit. These policies vary widely in strength, design, and enforcement, ranging from full “bell-to-bell” bans to more limited, classroom-only restrictions.

But as the momentum for change builds, some key questions remain: To what extent do bell-to-bell phone policies actually work to improve student attention, performance, friendships, and mental health? In what ways? For whom, and under what conditions? And how should we define success?

Several promising research efforts are beginning to answer these questions. Phones in Focus, led by Angela Duckworth, Hunt Allcott, and Matthew Gentzkow, is mapping phone policies across U.S. schools. Researchers at UNC Chapel Hill, in collaboration with the Phone-Free Schools Movement, are evaluating the efficacy of a new statewide phone policy in North Carolina.

These projects offer timely, important findings to the academic community, and we encourage educators and policymakers to explore them. But until now, schools have lacked a clear, accessible tool to evaluate the impact of their own phone policies. Legislators, too, have lacked a ready-to-use survey they can offer to schools in their jurisdictions to assess whether these policies are making a difference. Without measurement, schools and policymakers won’t know what’s working or what needs to change. A policy may be put in place and remain untouched, even if it is falling short. States and districts won’t have the information they need to recommend improvements or scale successful approaches.

To fill this gap, our team at the Tech and Society Lab at NYU Stern, in partnership with the Stanford Social Media Lab, has developed the Toolkit for Assessing Phones in Schools (TAPS).

This post introduces the toolkit and explains how it works. We hope it will help schools across the globe begin assessing what’s working, what’s not, and how to make informed, evidence-based decisions going forward.

“Yes! I want to use it right now. I think it will give us the concrete data we need.”

— School Administrator reviewing the TAPS

What is the TAPS?

The Toolkit for Assessing Phones in Schools (TAPS) is a free, accessible, and standardized set of surveys designed to help elementary, middle, and high schools evaluate the impact of new phone policies. It was developed between February and August 2025, through multiple rounds of feedback from academic researchers, teachers, undergraduate research assistants, and administrators from across the country. A detailed account of the development process is available in the TAPS: Methodology.

At the heart of TAPS are four surveys, one each for students, teachers, parents, and administrators, tailored for use in elementary (K–5), middle school (grades 5–8), and high school (grades 9–12). The student and teacher surveys are available in both short and long formats, allowing schools to choose the version that best fits their needs. The administrator and parent surveys each come in a single length.

Ideally, schools will administer the surveys both before and after a new phone policy is implemented. This pre/post design enables educators to track meaningful changes over time and assess whether the policy is working as intended.

How Does It Work?

1. What Do the Surveys Measure?

Each TAPS survey is structured around a set of core outcomes related to school life and student well-being that are likely to be affected by phone use and policy changes. Some constructs are shared across most surveys: school experience, tech use, perceptions of the policy itself. Others are only asked of some groups.

Students, for example, can speak to changes in their attention, sleep, and social life. Teachers are uniquely positioned to assess classroom dynamics. Administrators can provide school-wide data. And parents can offer insight into what’s happening at home.

Table 1. Key Constructs Measured by Each Survey

(Selected examples below; full construct list available in the TAPS: User Guide.)

2. Sample Questions

Each survey includes a mix of quantitative and open-ended items. Below are a few examples:

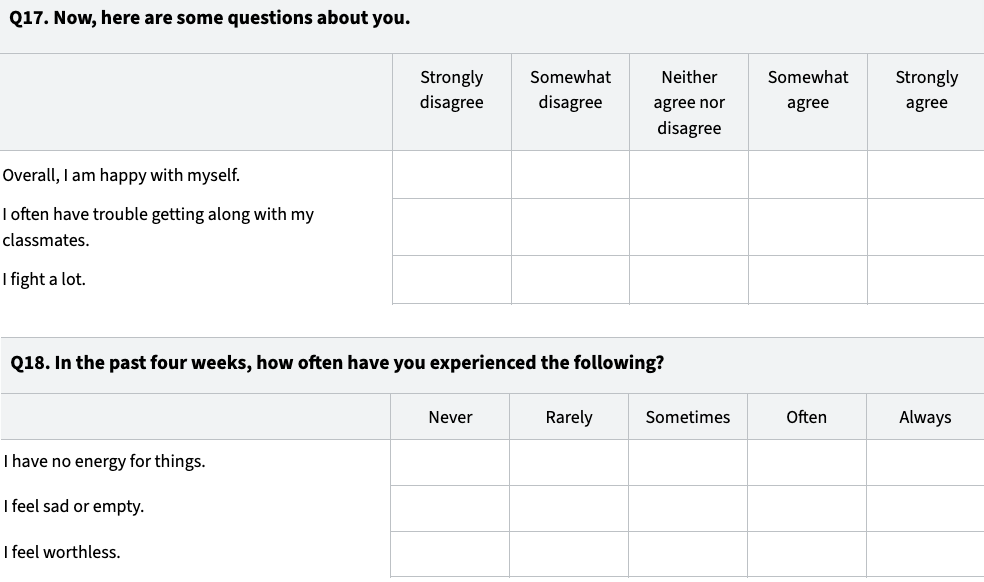

Mental Health Questions from Student Short Survey:

From the Administrator Survey (School-wide indicators):

For this school year, what is the Average Daily Attendance (ADA) percentage at this school?

For this school year, what is this school’s suspension rate?

How many reported bullying incidents have there been this school year?

How many reported cyberbullying incidents have there been this school year?

3. Short and Long Survey Versions

The student and teacher short versions of the TAPS surveys take about 5–8 minutes to complete and focus on the most critical constructs. They’re designed to be easy to administer and interpret.

The student and teacher long versions, which take 15–20 minutes, offer a deeper dive. These versions draw directly from validated instruments in the scientific literature and provide a fuller picture across a broader range of constructs. All items included in the short-form versions are also found in the long-form.

Schools can choose whichever version best fits their goals, time constraints, and capacity.

4. Pre/Post Design

To understand whether phone policies are working, schools need more than a snapshot at a single point in time. Instead, they need a before and after.

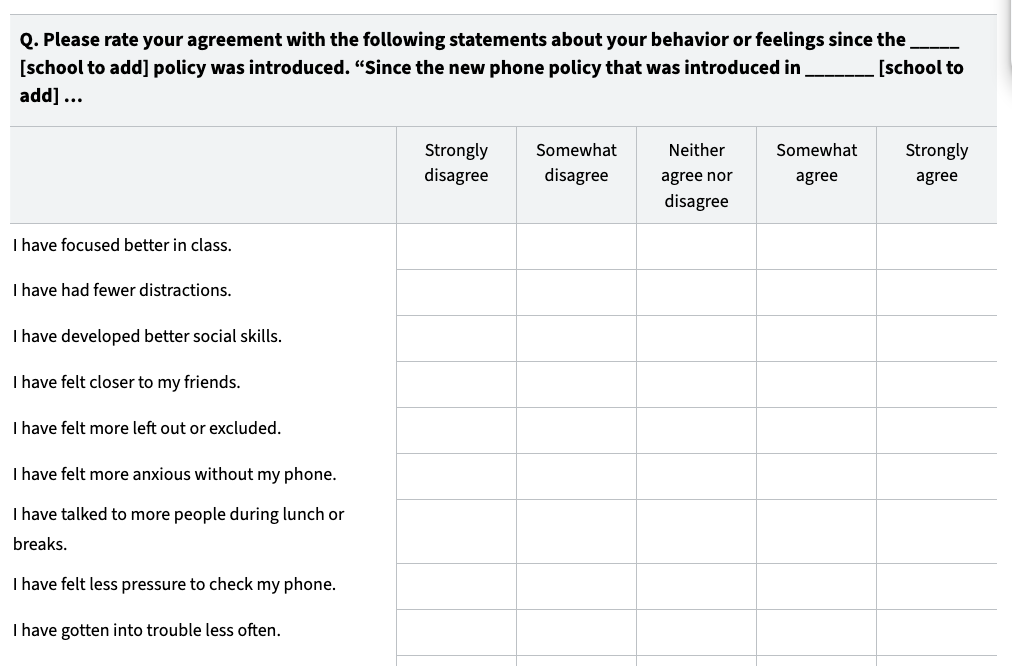

For schools that will be going phone-free after January 1, 2026, we recommend administering the survey three months into the school year and repeating it at a similar time the next year. (The TAPS: User Guide offers more detailed implementation guidance.) We then recommend regular follow-ups in subsequent years. Each survey also includes a set of optional questions recommended for use in the year after the policy is introduced.

For schools that have already implemented a phone policy, it’s never too late to begin measurement (especially if you're planning to revise or update your phone policy). You can still administer the surveys to examine perceptions of the policy’s impact. If you are planning to change or strengthen your policy in any way, you can administer the surveys before and after those changes are made. This will allow you to track changes over time and make more informed decisions about future implementation.
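As a rough illustration of the before-and-after comparison described above, the sketch below computes the average change on a single survey item between two administrations. The item wording, the 1–5 response scale, and every number are hypothetical and are not taken from the TAPS; the TAPS: User Guide remains the reference for actual analysis.

```python
from statistics import mean

def mean_change(pre_scores, post_scores):
    """Return (pre mean, post mean, post minus pre) for one survey item."""
    pre_avg = mean(pre_scores)
    post_avg = mean(post_scores)
    return pre_avg, post_avg, post_avg - pre_avg

# Hypothetical responses to one item, e.g. "I can focus in class"
# (1 = never, 5 = always). These are made-up values, not TAPS data.
pre_focus = [2, 3, 3, 4, 2, 3]    # first wave, before the policy
post_focus = [3, 4, 3, 4, 3, 4]   # second wave, similar time the next year

pre_avg, post_avg, change = mean_change(pre_focus, post_focus)
print(f"Pre: {pre_avg:.2f}  Post: {post_avg:.2f}  Change: {change:+.2f}")
```

A positive change here only describes what happened between the two waves. On its own it does not show that the policy caused the change, a limit discussed under Cautions and Caveats.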

5. Formats and Access

To make TAPS easy to use in a wide range of school settings, we’ve created two versions of every survey: one printable, one digital.

Google Docs versions can be used by schools that prefer paper surveys.

Google Form versions can be used by schools that prefer to collect responses digitally. Simply make a copy of each Google Form and customize it for your school’s use. That way, you own all the data: it is not shared with anyone, including us, and you can analyze it on your own.

Note: We will soon be launching a Qualtrics version, which you can use in collaboration with the Stanford Social Media Lab.

All materials are free and open-access. You can download individual surveys or full packs (short-form or long-form), and you’re welcome to revise the instructions to fit your local context.
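For schools that analyze their own digital responses, one possible starting point is sketched below. It assumes the responses have been downloaded as a CSV file and averages one numeric item within each grade. The column names and sample rows are invented for illustration and do not match the actual TAPS forms.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Stand-in for a downloaded responses file. Column names are hypothetical.
SAMPLE_CSV = """Timestamp,Grade,Focus in class (1-5)
2025-11-03 09:01,6,3
2025-11-03 09:02,6,4
2025-11-03 09:04,7,2
2025-11-03 09:05,7,
2025-11-03 09:07,8,5
"""

def mean_by_group(csv_file, group_col, item_col):
    """Average a numeric item within each group, skipping blank answers."""
    scores = defaultdict(list)
    for row in csv.DictReader(csv_file):
        answer = row[item_col].strip()
        if answer:  # unanswered items arrive as empty strings
            scores[row[group_col]].append(int(answer))
    return {group: mean(values) for group, values in scores.items()}

results = mean_by_group(io.StringIO(SAMPLE_CSV), "Grade", "Focus in class (1-5)")
for grade, avg in sorted(results.items()):
    print(f"Grade {grade}: mean = {avg:.2f}")
```

With a real export, the in-memory sample would be replaced by something like `open("responses.csv", newline="")`, where the file name is again an assumption.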

How to Use the TAPS

The TAPS was built to be useful at both the individual school level and across larger school systems. It can support a principal testing a policy in one building or a governor rolling out statewide reforms.

1. For Individual School Testing

If you’re a school leader, teacher, or counselor looking to evaluate a phone policy at your school, the TAPS: User Guide (Section 3) offers clear step-by-step instructions on how to implement the surveys, analyze results, and interpret what the data can (and can’t) tell you.

Even if you're not publishing findings or running a formal study, this kind of measurement can inform staff conversations, parent communication, and board decision-making. It can also help you determine whether you should continue the policy, refine the policy, or roll it back.

We’re also developing a Scoring Guide to help interpret survey results. And if you'd like additional support, you can reach out to the Stanford Social Media Lab for guidance.

Note: Schools retain full control over their own data. The TAPS team does not collect data unless explicitly invited to collaborate on a research project or evaluation.

2. For Multi-School Testing

If you are a governor, mayor, superintendent, or researcher working across multiple schools, we recommend reviewing the TAPS: User Guide (Section 4). It includes a proposed research design that enables stronger evidence through coordinated, large-scale evaluation, along with high-level instructions for design and implementation.

We encourage early partnerships with research teams, whether with the Stanford Social Media Lab or elsewhere, to ensure evaluation plans are rigorous and useful from the start.

“We were searching for something like this to help us start the implementation process.” — Teacher reviewing the TAPS

Cautions and Caveats

While TAPS is a valuable tool for assessing and tracking changes in the school environment, it is not designed to establish causality with high confidence. Like most observational tools, it cannot fully rule out confounding variables; that would require a randomized controlled trial. That said, the TAPS makes it easy for individual schools to track changes within their school, and for research teams to conduct studies that compare change scores over time between schools that changed their phone policy and schools that did not. Such studies can offer meaningful evidence to help schools decide whether to continue, adjust, or discontinue a policy based on observed outcomes and stakeholder feedback. For guidance on interpreting results and understanding the limits of causal claims, see the TAPS: User Guide.
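To make the idea of comparing change scores concrete, here is a minimal sketch under stated assumptions. Each school is reduced to a hypothetical pre and post mean on one construct, and the average change in schools that adopted a policy is compared with the average change in schools that did not. The function names and figures are illustrative only; this is not the research design proposed in the TAPS: User Guide, and a real study would also need to account for sample sizes and uncertainty.

```python
from statistics import mean

def change_score(pre_mean, post_mean):
    """Change in a school's average score between two survey waves."""
    return post_mean - pre_mean

def difference_in_changes(policy_schools, comparison_schools):
    """Average change in policy schools minus average change in comparison schools."""
    policy_avg = mean(change_score(pre, post) for pre, post in policy_schools)
    comparison_avg = mean(change_score(pre, post) for pre, post in comparison_schools)
    return policy_avg - comparison_avg

# Hypothetical school-level means as (pre, post) pairs on one construct.
policy_schools = [(3.0, 3.5), (2.5, 3.0), (3.0, 3.25)]
comparison_schools = [(3.0, 3.0), (2.5, 2.75), (3.25, 3.25)]

estimate = difference_in_changes(policy_schools, comparison_schools)
print(f"Difference in change scores: {estimate:+.2f}")
```

Subtracting the comparison schools' change removes shifts that affected all schools over the same period, which is why this comparison is more informative than a single school's before-and-after difference. It still cannot rule out differences between the two groups that coincide with the policy.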

In the coming weeks and months, we will be releasing additional resources, including a comprehensive scoring guide, a library of relevant measures, and a Qualtrics version of the survey.

Try It Out

As schools, states, and countries around the world implement new phone policies, it’s essential that these policies be rigorously evaluated. Without measurement, we won’t know what works, what to change, or what to recommend going forward.

This toolkit is designed to help do exactly that. It gives schools a practical, research-informed way to assess the impact of their policies, and to make decisions that prioritize student well-being, attention, and learning.

Give it a try, and let us know how to improve it.

This is genius and generous providing educational institutions with an adaptable format! Congratulations on being change makers!

I like this idea!

As someone who works at a middle school... I think if you want this to be widely adopted by schools, you will need to make the process much more streamlined and straightforward.

I think I am unusually well-equipped to understand and implement a study like this, for a middle school teacher. I was enthusiastic about the idea of doing a study like this. Looking at the details of your implementation guide, however, I think doing analysis on this data looks like a whole lot of work for people who already have demanding full-time jobs.

I think if you can provide digital forms for a school to get different stakeholders to fill out, and then provide them with digestible results, that would be a realistic ask. Or possibly the test administrator agrees to meet twice with the Stanford researchers, once beforehand to discuss implementation and once afterwards to discuss results?

I say this with abundant sympathy as a person who is frequently in the position of trying to get other people to do interesting and useful things and being told that they are too complicated.