Why Some Researchers Think I’m Wrong About Social Media and Mental Illness

Six propositions for evaluating the evidence.

In the first eight posts of the After Babel substack, we have laid out the evidence that an epidemic of mental illness began around 2012, simultaneously in the USA, UK, Canada, Australia, and New Zealand. (Zach will show what happened in the Nordic countries on Wednesday [here is the link]).

The most controversial post among the eight is this one: Social Media is a Major Cause of the Mental Illness Epidemic in Teen Girls. Here’s the Evidence. In that post, I introduced the first of the many Collaborative Review Docs that I curate with Zach Rausch, Jean Twenge, and others. I summarized the four major categories of studies that bear on the question of social media use and teen mental illness: correlational studies, longitudinal studies, true experiments, and quasi (or natural) experiments.

I showed that the great majority of correlational studies pointed to statistically significant relationships between hours of use and measures of anxiety and depression. Furthermore, when you zoom in on girls, the relationships are not small: girls who spend more than 4 hours a day on social media have two to three times the rate of depression of girls who spend an hour or less. The common refrain “correlation does not prove causation” is certainly relevant here, but I showed that when you bring in the three other kinds of studies, the case for causation gets quite strong.

In the weeks since that post, four social scientists and statisticians have written essays arguing that I am wrong. They do not say that social media is harmless; rather, they argue that the evidence is not strong enough to support my claim that social media is harmful. I will call these critics the skeptics; here are their essays in the order that they were published:

A. Stuart Ritchie: Don’t panic about social media harming your child’s mental health – the evidence is weak. (at inews.co.uk)

B. The White Hatter: “Some” Are Misrepresenting CDC Report Findings Specific To The Use Of Social Media & Technology By Youth. (at The White Hatter blog)

C. Dylan Selterman: Why I'm Skeptical About the Link Between Social Media and Mental Health. (at Psychology Today)

D. Aaron Brown: The Statistically Flawed Evidence That Social Media Is Causing the Teen Mental Health Crisis (at Reason.com)

The skeptics believe that I am an alarmist. That word is defined at dictionary.com as “a person who tends to raise alarms, especially without sufficient reason, as by exaggerating dangers or prophesying calamities.”

I think I have a pretty good record of “prophesying.” Drawing on my research in moral psychology, I have warned about 1) the dangers that rising political polarization poses to American democracy (in 2008 and 2012), 2) the danger that moral and political homogeneity poses to the quality of research in social psychology (in 2011 and 2015) and to the academy more broadly (co-founding HeterodoxAcademy.org in 2015), and 3) the danger to Gen Z from the overprotection (or “coddling”) that adults have imposed on them since the 1990s, thereby making them more anxious and fragile (in 2015 and 2018, with Greg Lukianoff, and 2017 with Lenore Skenazy). Each of these problems has gotten much worse since I wrote about it, so I think I’ve rung some alarms that needed to be rung, and I don’t think I’ve rung any demonstrably false alarms yet.

I’ll therefore label myself and those on my side of the debate the alarm ringers. I credit Jean Twenge as the first person to ring the alarm in a major way, backed by data, in her 2017 Atlantic article titled Have Smartphones Destroyed a Generation? and in her 2017 book iGen.

So this is a good academic debate between well-intentioned participants. It is being carried out in a cordial way, in public, in long-form essays rather than on Twitter. The question for readers — and particularly parents, school administrators, and legislators — is which side you should listen to as you think about what policies to adopt or change.

How should you decide? Well, I hope you’ll first read my original post, followed by the skeptics’ posts, and then come back here to see my response to the skeptics. But that’s a lot of reading, so I have written my response below to be intelligible and useful to non-social scientists who are just picking up the story here.

In the rest of this essay, I lay out six propositions that I believe are true and that can guide us through the complexity of the current situation. They will illuminate how five social scientists can look at the same set of studies and reach opposing conclusions. By identifying six propositions, I hope I am advancing the specificity of the debate, inviting my critics to say which of the propositions is false, and inviting them to offer their own. To foreshadow my most important points:

The skeptics are demanding a standard of proof that is appropriate for a criminal trial but that is inappropriately high for a civil trial or a product safety decision.

The skeptics are mistaking the map for the territory, the datasets for reality.

Parents and policymakers should consider Pascal’s Wager: If you listen to the alarm ringers and we turn out to be wrong, the costs are minimal and reversible. But if you listen to the skeptics and they turn out to be wrong, the costs are much larger and harder to reverse.

Proposition 1: Something big is happening to teen mental health in many countries at the same time, and nobody has yet offered an alternative explanation that works internationally.

I have encountered no substantial criticism of my claim that an epidemic of mental illness (primarily anxiety and depression) began in multiple countries around the same time––the early 2010s. Selterman notes the relevant point that depression rates have been rising with some consistency since the mid-20th century, so this is not entirely new. But I believe that the velocity of the rise is unprecedented. The graphs are shocking and astonishingly similar across measures and countries. Right around 2012 or 2013, teen girls in many countries began reporting higher rates of depression and anxiety, and they began cutting and poisoning themselves in larger numbers. The numbers continued to rise, in most of those countries, throughout the 2010s, with very few reversals.

Here are the theories that have been offered so far that can explain why this would happen in the same way in many countries at roughly the same time:

1. The Smartphones and Social Media (SSM) Theory: 2012 was roughly when most teens in the USA had traded in their flip phones for smartphones, those smartphones got front-facing cameras (starting in 2010), and Facebook bought Instagram (in 2012) which sent its popularity and user base soaring. The elbow in so many graphs falls right around 2012 because that’s when the “phone-based childhood” really got going. Girls in large numbers began posting photographs of themselves for public commentary and comparison, and any teens who didn’t move their social lives onto their phones found themselves partially cut off, socially.

2. There is no other theory.

Many people have offered explanations for why 2012 might have been an elbow in the USA, such as the Sandy Hook school shooting and the increase in terrifying “lockdown drills” that followed, but none of these theories can explain why girls in so many other countries began getting depressed and anxious, and began to harm themselves, at the same time as American girls.

The White Hatter makes the important point that “youth mental health is more nuanced and multifactorial than just pointing to social media and cell phones as the primary culprit” for the rise in mental illness. I agree, and in future posts I’ll be exploring what I believe is the other major cause––the loss of free play and daily opportunities to develop antifragility. The White Hatter offers his own list of alternative factors that might be implicated in rising rates of mental illness, including: Increases in school shootings and mass violence since 2007; sexualized violence; increased rates of racism, xenophobia, homophobia, and misogyny; increased rates of child abuse; housing crisis; concerns about climate change; the current climate of political polarization, and many more. But again, these apply to the USA and some other countries, but not most others, or at least not all at the same time. The climate change hypothesis seems like it could explain why it was teen girls on the left whose mental health declined first and fastest, if they were the group most alarmed by climate change, but since when does a crisis that mobilizes young people cause them to get depressed? Historically, such events have energized activists and given them a strong sense of meaning, purpose, and connection. Plus, heightened concern about the changing climate began in the early 1990s and rose further after Al Gore’s 2006 documentary An Inconvenient Truth, but symptoms of depression among teens were fairly stable from 1991 to 2011.

The only other candidate that is often mentioned as having had global reach is the Global Financial Crisis that began in 2008. But that doesn’t work, as Jean Twenge, I, and others have shown. Why would rates of mental illness be stable for the first few years of the crisis and then start rising only as the crisis was fading, stock markets were rising, and unemployment rates were falling (at least in the USA)? And why are rates still rising today?

If you can think of an alternative theory that fits the timing, international reach, and gendered nature of the epidemic as neatly as the SSM theory, please put it in the comments. Zach and I maintain a Google doc that collects such theories, along with studies that support or contradict each theory. While some of them may well be contributing to the changes in the USA, none so far can explain the relatively synchronous international timing.

Proposition 2: “The preponderance of the evidence” is the appropriate standard for civil trials and tort law about dangerous products, but the skeptics all demand “beyond a reasonable doubt.”

The skeptics are far more skeptical about each study, and about the totality of the studies, than the alarm ringers. Much of the content in Brown and Ritchie consists of criticisms of specific studies, and I think many of their concerns are justified. But what level of skepticism is right when addressing the overall question: is social media harming girls?

There are two levels often used in law, science, and life. Each is appropriate for a different task:

The highest level is “beyond a reasonable doubt.” This is the standard of proof needed in criminal cases because there is widespread agreement that a false positive (convicting an innocent person) is much worse than a false negative (acquitting a guilty person). It is also the standard editors and reviewers use when evaluating statistical evidence in papers submitted to scientific journals. We usually operationalize this level of skepticism as “p < .05” [pronounced “p less than point oh five”], which means (in the case of a simple experiment with two conditions): If there were no real effect, the probability (p) that a difference this large between the experimental and control conditions would come about by chance is less than five out of 100.

The lower and more common level is “the preponderance of the evidence.” This is the standard of proof needed in civil cases because we are simply trying to decide: Is the plaintiff probably right, or probably wrong? The thousands of parents suing Meta and Snapchat over their children’s deaths and disabilities will not have to prove their case beyond a reasonable doubt; they just have to convince the jury that the odds are greater than 50% that Instagram or Facebook was responsible. We can operationalize this as “p > .5,” [“p greater than point five”], which means: the odds that the plaintiff is correct that the defendant has caused him or her some harm are better than 50/50. This is also the standard that ordinary people use for much of their decision-making.

Which standard are the skeptics using? Beyond a reasonable doubt. They won’t believe something just because it is probably true; they will only endorse a scientific claim if the evidence leaves little room for doubt. I’ll use Brown as an example, for he is the most skeptical. He demands clear evidence of very large effects before he’ll give his blessing:

Most of the studies cited by Haidt express their conclusions in odds ratios—the chance that a heavy social media user is depressed divided by the chance that a nonuser is depressed. I don't trust any area of research where the odds ratios are below 3. That's where you can't identify a statistically meaningful subset of subjects with three times the risk of otherwise similar subjects who differ only in social media use. I don't care about the statistical significance you find; I want clear evidence of a 3–1 effect. [Emphasis added.]

In other words, if multiple studies find that girls who become heavy users of social media have merely twice the risk of depression, anxiety, self-harm, or suicide, he doesn’t want to hear about it because it COULD conceivably be random noise.

Brown goes to great lengths to find reasons to doubt just about any study that social scientists could produce. For example, participants might not be truthful because:

Data security is usually poor, or believed to be poor, with dozens of faculty members, student assistants, and others having access to the raw data. Often papers are left around and files on insecure servers, and the research is all conducted within a fairly narrow community. As a result, prudent students avoid unusual disclosures.

This level of skepticism strikes me as unjustifiable and counterproductive: we should not trust any studies because students might not be telling the truth because they might be worried that the experimenters might be careless with the data files––data often derived from anonymous surveys. And, in fact, using this high level of skepticism, Brown is able to dismiss all of the hundreds of studies in my collaborative review doc: “Because these studies have failed to produce a single strong effect, social media likely isn't a major cause of teen depression.”

The standard of proof that parents, school administrators, and legislators should be using is the preponderance of the evidence. Given their responsibilities, a false negative (concluding that there is no threat when in fact there is one) is at least as bad as a false positive (concluding that there is a threat when in fact there is none). In fact, one might even argue that people charged with a duty of care for children should treat false negatives as more serious errors than false positives, although such a defensive mindset can quickly degenerate into the kind of overprotection that Selterman raises at the end of his critique, and that Greg Lukianoff and I wrote about in our chapter in The Coddling on paranoid parenting.

Proposition 3: The map is not the territory. The dataset is not reality.

When René Magritte wrote “This is not a pipe” below a painting of a pipe, he was playfully reminding us that the two-dimensional image is not an actual pipe. He titled the painting “The Treachery of Images.”

Figure 1. René Magritte, The Treachery of Images, 1929.

Similarly, when the Polish-American philosopher Alfred Korzybski said “The map is not the territory,” he was reminding us that in science, we make simple abstract models to help us understand complex things, but then we sometimes forget we’ve done the simplification, and we treat the model as if it were reality. This is a mistake that I think many skeptics make when they discuss the small amount of variance in mental illness that social media can explain.

Here’s the rest of a quote from Brown that I showed earlier:

Because these studies have failed to produce a single strong effect, social media likely isn't a major cause of teen depression. A strong result might explain at least 10 percent or 20 percent of the variation in depression rates by difference in social media use, but the cited studies typically claim to explain 1 percent or 2 percent or less. These levels of correlations can always be found even among totally unrelated variables in observational social science studies.

Here we get to the fundamental reason why many of the skeptics are skeptical: the effect sizes often seem to them too small to explain an epidemic. For example, the correlations found in large studies between digital media use and depression/anxiety are usually below r = .10. The letter “r” refers to the Pearson product-moment correlation, a widely used measure of the degree to which two variables move together. Let me explain what that means.

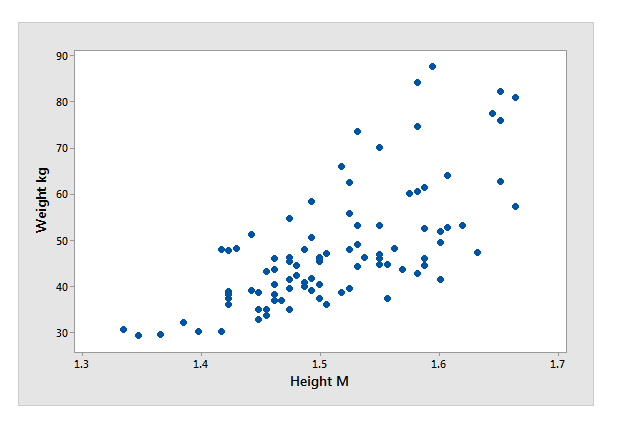

Statistician Jim Frost has a helpful post explaining what correlation means by showing how the height and weight of girls are correlated. He writes: “The scatterplot below displays the height and weight of pre-teenage girls. Each dot on the graph represents an individual girl and her combination of height and weight. These data are actual data that I collected during an experiment.”

Figure 2. From Interpreting Correlation Coefficients, by statistician Jim Frost.

You can see that as height increases along the X-axis, weight increases along the Y-axis, but it’s far from a perfect correlation. A few tall girls weigh less than some shorter girls, although none weigh less than the shortest. In fact, the correlation shown in Figure 2 is r = .694. To what extent does variation in height explain variation in weight? If you square the correlation coefficient, it tells you the proportion of variance accounted for. (That’s a hard concept to convey intuitively, but you don’t need to understand it for this post). If we square .694 and multiply it by 100 to make it a percentage, we get 48.16%. This means that knowing the height of the girls in this particular dataset explains just under half of the variation in weight, in this particular dataset.
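For readers who want to check the arithmetic, here is a minimal Python sketch of the squaring step. The function name `variance_explained` is made up for this illustration; the only number taken from the text is the r = .694 reported for Figure 2.

```python
def variance_explained(r: float) -> float:
    """Return the percentage of variance accounted for by a Pearson correlation r."""
    return (r ** 2) * 100


# r = .694 is the height-weight correlation quoted above for Figure 2.
print(round(variance_explained(0.694), 2))  # 48.16
```

The same function applied to r = .10 returns 1.0, which is why correlations below .10 are described as explaining less than 1 percent of the variance in a dataset.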

Is that a lot or a little? It depends on what world you are in. In a world where you can measure physical things with perfect accuracy using tape measures and scales, it’s pretty good, although it tells you that there is a lot more going on that you haven’t captured just by knowing a girl’s height.

But it is amazingly high in the social sciences, where we can’t measure things with perfect accuracy. It is so high that we rarely see such correlations (except when studying identical twins, whose personality traits often correlate above r = .60). Let’s look at the social media studies in section 1 of the Collaborative Review doc. Most of the studies ask teenagers dozens or hundreds of questions about their lives including, typically, a single item about social media use (e.g., “how many hours a day do you spend, on a typical day, using social media platforms?”). They also typically include one item––or sometimes a scale composed of a few related questions––that asks the teen to assess her own level of anxiety or depression.

The first question is very hard to answer. (Try it yourself: how many hours a day do you spend on email plus texting?). Even using the screen time function on a phone doesn’t give you the true answer because people use multiple devices and they multitask. And even if we could measure it perfectly, “hours per day” is not really what we want to know; we want to know exactly what girls are doing and what they are seeing, but only the platforms know that, and they won't tell us. So we researchers are left to work with crude proxy questions. We just don’t have tape measures in the social sciences, and this places an upper bound on how much variance we can explain.

Suppose that Mr. Frost didn’t have any tape measures or weight scales, so he asked one research assistant to estimate the height of each girl in the study while standing 30 yards away, and he asked a different research assistant to estimate the weight of each girl, standing close by but wearing someone else’s prescription glasses. What would the correlations be? I don’t know, but I know they’d be much lower. They’d probably be in the ballpark of most correlations in personality and social psychology, namely, somewhere between r = .10 and r = .50.

Suppose it was r = .20. If we square .20, we get 0.04, or 4% of the variance explained. Would that mean that knowing someone’s height explains only 4% of the variance in weight in the real world? NO, because the map is not the territory, and the dataset is not reality. It’s just 4% in that dataset, which is a simplified model of the world. So when Brown insists that correlations must explain at least 10% of the variance, he is saying “show me r > .32 or I’m not listening.” He is acting as though the variance in mental health explained by social media use in the dataset is the same as the variance in mental health explained by social media in the real world.
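The thought experiment about eyeballed heights and weights can be played out in a small simulation. What follows is only a sketch under invented assumptions: the heights, weights, and error sizes are made-up numbers chosen so that the "true" correlation lands near Frost's value, and none of them come from his data. The helper `pearson_r` is written out by hand so the example needs nothing beyond the Python standard library.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical "true" heights (cm) and weights (kg) for 5,000 simulated girls.
true_height = [rng.gauss(150, 8) for _ in range(5000)]
true_weight = [45 + 0.7 * (h - 150) + rng.gauss(0, 6) for h in true_height]

# Crude estimates: each research assistant adds a large random error
# (standard deviation 12, an assumed value) to the true measurement.
est_height = [h + rng.gauss(0, 12) for h in true_height]
est_weight = [w + rng.gauss(0, 12) for w in true_weight]

r_true = pearson_r(true_height, true_weight)
r_noisy = pearson_r(est_height, est_weight)

print(f"tape measure and scale: r = {r_true:.2f}")
print(f"eyeballed estimates:    r = {r_noisy:.2f}")
```

With these particular assumptions the first correlation should come out near .7 and the second near .2, even though the underlying relationship between height and weight is identical in both cases. Only the quality of the measurement changed.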

Brown (and also Ritchie) is right that correlational studies are just not that useful when trying to figure out what caused what. Experiments are much more valuable. But correlational studies are a first step, telling us what goes with what in the available datasets. To set a minimum floor of r > .32 in the datasets when what you want is r > .32 in the world is, I believe, an error, one that is likely to lead to many false negatives.¹
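The r > .32 floor follows from running the squaring step in reverse: take the square root of the share of variance demanded. A short sketch, with `r_required` as a made-up helper name:

```python
import math


def r_required(share_of_variance: float) -> float:
    """Return the Pearson r whose square equals the given share of variance (0 to 1)."""
    return math.sqrt(share_of_variance)


# The 10 percent and 20 percent thresholds mentioned in the Brown quote.
for share in (0.10, 0.20):
    print(f"{share:.0%} of variance -> r = {r_required(share):.2f}")
# 10% of variance -> r = 0.32
# 20% of variance -> r = 0.45
```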

Proposition 4: The two sides actually agree that the key correlation in the datasets is around r = .15 or higher.

Ritchie wrote: “I’m not going to discuss the correlational studies: the ones that say that social media or smartphone use is correlated positively or negatively with mental health problems. That’s a debate that’s been had over and over again, with scientists disagreeing over the size of the correlation.”

That was true before 2020. But since 2020, there has been an unexpected convergence between some of the major disputants that the key correlation across datasets is actually somewhere between r = .15 and r = .20.

Some confusion has come about because most of the available correlational studies have focused not on social media use but on “digital media use” or “screen time,” which includes any screen-based activity, including watching Netflix videos or playing video games with a friend. These are not particularly harmful activities, so including them reduces whatever correlations are ultimately found between screen time and depression or anxiety. The correlations are usually r < .10. These are the small correlations that the skeptics point to. Moreover, most of these studies also merge boys and girls together; they rarely report the correlations separately for each sex.

In contrast, the alarm ringers are focused on the hypothesis that social media is particularly harmful to girls.

You can see this confusion in the most important paper in the field: a study of three large datasets in the USA and UK conducted by Amy Orben and Andrew Przybylski and published in 2019. I described this study in detail in my Causality post. The important thing here is that the authors claim, as the skeptics do, that the correlation between hours spent on digital media and variables related to well-being is so tiny that it is essentially zero. They report that it is roughly the same size as the correlation (in the datasets) of mental health with “eating potatoes” or “wearing eyeglasses.”

But note that those claims were about digital media, for boys and girls combined. When you look at what the article reported for social media only, the numbers are several times larger. Yet, many news outlets erroneously reported that social media was correlated at the level of potatoes. Furthermore, Orben and Przybylski didn’t report results separately for boys and girls, and the correlation is almost always higher for girls.

Jean Twenge and I wanted to test the SSM theory for ourselves, so we re-ran Orben and Przybylski’s code on the same datasets, limiting our analysis to social media and girls (and making a few other changes to test the hypothesis more directly²). We found relationships equivalent to correlation coefficients of roughly r = .20.

Do we disagree with Orben and Przybylski on the size of the correlation? Surprisingly, no. In 2020 Amy Orben published a “narrative review” of many other reviews of the academic literature. She concluded that “the associations between social media use and well-being therefore range from about r = − 0.15 to r = − 0.10.” [Ignore the negative signs.³] So if Orben herself says the underlying correlation (across datasets, not in the real world) is between .10 and .15 for both sexes merged, and if we all agree that the relationship is tighter for girls than for boys, then we’re pretty close to a consensus that the range for girls rises above r = .15.

Jeff Hancock of Stanford University is another major researcher in this field with whom I have had a friendly disagreement over the state of the evidence. He and his team posted a meta-analysis in 2022 which focused only on social media. They analyzed 226 studies published between 2006 and 2018. The studies were mostly of young adults, not teens, and, because many were done before Instagram was popular, Facebook was the main platform used. The headline finding, in the abstract, is that “social media use is not associated with a combined measure of well-being.” And yet here too, when you zoom in on depression and anxiety, rather than measures of happiness or positive health, they find the same values as Orben for both sexes merged: they report “small positive associations with anxiety (r = .13, p < .01)… and depression (r = .12, p < .01 …).” [see the abstract, and p. 30]. Moreover, they note that the correlations were even larger for adolescents than for young adults (p. 32), so that puts us somewhere above r = .13. They do not mention sex or gender in the report, but since the links are always tighter for girls, that puts us, once again, above r = .15 in these datasets (not in the world).

This is not small potatoes. When Jean Twenge and I went digging through the datasets used by Orben and Przybylski to find the right comparison for r = .15 we found that it’s not eating potatoes or wearing eyeglasses; it is binge drinking and using marijuana. If you want to dig deeper into what correlations like this can do, see this post by Chris Said on how Small correlations can drive large increases in teen depression. He shows that a correlation of r = .17 could account for a 50% increase in the number of depressed girls in a population.

Proposition 5: When researchers turn a question into variables they can measure, they often focus on one causal model and lose sight of others.

Proposition 3 said that the dataset is not reality in part because it is built using only rough approximations of reality. But there is a far more important reason why the dataset is not reality: there are many potential causal pathways, but when researchers choose a simplified model of the world and a set of variables to test that model, it generally focuses them on one or a few causal paths and obscures many others.

For example, how does social media get under the skin? How does it actually harm teens, if indeed it does? The causal model that underlies the great majority of research is called the “dose-response” model: we treat social media as if it were a chemical substance, like aspirin or alcohol, and we measure the mental health outcomes from people who consume different doses of it. When we use this model, it guides us to ask questions such as: Is a little bit of it bad for you? How much is too much? What kinds of people are most sensitive to it?

Once we’ve decided upon a causal model we want to test, we then choose the variables we can obtain to test the theory. This is called operationalization, which is “a process of defining the measurement of a phenomenon which is not directly measurable, though its existence is inferred from other phenomena.” Since we can’t measure the thing directly and we have to measure something, we make up proxy questions like “How many hours a day do you spend, on a typical day, using social media platforms?”

But here’s the problem. Once the data comes pouring in from dozens of studies and tens of thousands of respondents, social scientists immerse themselves in the datasets, critique each other’s methods, and forget about the many causal models for which we have no good data. In social media research, we focus on “how much social media did a person consume?” and we plan our experiments accordingly. Most of the true experiments in the Collaborative Review Doc manipulate the dosage and look for a change in mental health minutes later, or days later, or weeks later. Most don’t even distinguish between platforms, as if Instagram, Facebook, Snapchat, and TikTok are just different kinds of liquor.

Selterman is aware of this problem, and he said that the field needs more precise causal models:

There’s a missing cognitive link. We still don’t know what exactly about social media would make people feel distressed. Is it social comparison? Sedentary lifestyle? Sleep disruption? Physical isolation? There’s no consensus on this. And simply pointing to generic “screen time” doesn’t help clarify things.

I fully agree. The transition from play-based childhood to phone-based childhood has changed almost every aspect of childhood and adolescence in some way. In fact, I recently renamed the book I’m writing from “Kids In Space” (which refers to a complicated metaphor that I should not have wedged into the title) to The Anxious Generation: How the great rewiring of childhood is causing an epidemic of mental illness.

Here are just two of the causal models I examine in the book:

A) The sensitive period model

Adolescence is a time of rapid brain rewiring, second only to the first few years of life. As Laurence Steinberg puts it in his textbook on adolescence:

Heightened susceptibility to stress in adolescence is a specific example of the fact that puberty makes the brain more malleable, or “plastic”. This makes adolescence both a time of risk (because the brain’s plasticity increases the chances that exposure to a stressful experience will cause harm) but also a window of opportunity for advancing adolescents’ health and well-being (because the same brain plasticity makes adolescence a time when interventions to improve mental health may be more effective).

Suppose, hypothetically, that social media were not at all harmful, with one exception: 100% of girls who became addicted to Instagram for at least six months during their first two years of puberty underwent permanent brain changes that set them up for anxiety and depression for the rest of their lives. In that case, nearly all studies based on the dose-response model would yield correlations of r = 0 since they mostly use high school or college samples. In the few studies that included middle school girls, the high correlation from the Instagram-addicted girls would get diluted by everyone else’s data and the final correlation might end up around .1 or .2. And yet, if it were, say, 30% of the girls who fell into that category at some point, then Instagram use by preteen girls could––in theory––explain 100% of the huge increase in depression and anxiety that began in all the Anglosphere nations around 2013.
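A toy simulation can make the first half of this hypothetical concrete. It is a sketch only: every number is invented, and the setup bakes in the assumption that current hours of use in a high school sample are unrelated to whether a girl was heavily exposed years earlier. The names `early_exposed`, `current_hours`, and `depression` are illustrative, and the 2-point bump on the depression score is an arbitrary choice.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(1)  # fixed seed so the run is repeatable
n = 20000

# Assumed: 30% of girls were heavily exposed during early puberty.
early_exposed = [rng.random() < 0.30 for _ in range(n)]

# Hours of use measured later, in high school, drawn independently of early exposure.
current_hours = [rng.uniform(0, 6) for _ in range(n)]

# Depression score depends only on early exposure (a 2-point bump) plus unrelated noise.
depression = [(2.0 if exposed else 0.0) + rng.gauss(0, 1) for exposed in early_exposed]

# What a dose-response study of this sample would report.
r_dose = pearson_r(current_hours, depression)

# What actually happened to the exposed girls in this toy world.
exposed_scores = [d for d, e in zip(depression, early_exposed) if e]
unexposed_scores = [d for d, e in zip(depression, early_exposed) if not e]
gap = sum(exposed_scores) / len(exposed_scores) - sum(unexposed_scores) / len(unexposed_scores)

print(f"dose-response correlation in the sample: r = {r_dose:.3f}")
print(f"gap between exposed and unexposed girls: {gap:.2f} points")
```

In this toy world the dose-response correlation hovers around zero while the exposed girls score about two points higher, because the variable that matters (early exposure) is not the one being measured (current hours).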

B) The Loss-of-IRL model

The transition to phone-based childhood occurred rapidly in the early 2010s as teens traded in their flip phones for smartphones. This “great rewiring of childhood” changed everything for teens, even those who never used social media, and even for those who kept using flip phones. Suppose, hypothetically, that the rapid loss of IRL (in real life) socializing caused 100% of the mental health damage. Kids no longer spent much time in person with each other; everything had to go through the phone, mediated by a few apps such as Snapchat and Instagram, and these asynchronous performative interactions were no substitute for hanging out with a friend and talking. If you went to the mall or a park or any other public place, no other teens would be there.

What would we find if we confined ourselves to the dose-response model? In the Loss-of-IRL model, social media is not like a poison that only kills those who take a lethal dose. It’s more like a bulldozer that came in and leveled all the environments teens needed to foster healthy social development, leaving them to mature alone in their bedrooms. So once again, the correlation in a dose-response dataset collected in 2019 could yield r = 0.0, and yet 100% of the increase in teen mental illness in the real world could (theoretically) be explained by the rewiring of childhood caused by the arrival of smartphones and social media (the SSM theory).
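
The same point can be sketched for the bulldozer scenario. The code below is only an illustration under stated assumptions: the cohort labels, the sample sizes, and the 0.5-point environmental shift are all hypothetical, and the key assumption is that the changed environment raises every teen's score by the same amount regardless of her own hours of use.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation, written out by hand to avoid extra dependencies."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_cohort(n, environment_shift, rng):
    """Return (hours, scores) for one hypothetical cohort.

    Assumption: harm comes from the leveled social environment, so the shift
    applies equally to heavy users, light users, and non-users.
    """
    hours = [rng.uniform(0, 6) for _ in range(n)]
    scores = [rng.gauss(0, 1) + environment_shift for _ in range(n)]
    return hours, scores


rng = random.Random(1)
# Hypothetical "before" cohort: no environmental shift.
_, scores_before = simulate_cohort(20000, environment_shift=0.0, rng=rng)
# Hypothetical "after" cohort: everyone shifted by the same amount.
hours_after, scores_after = simulate_cohort(20000, environment_shift=0.5, rng=rng)

within_r = pearson_r(hours_after, scores_after)
cohort_gap = (sum(scores_after) / len(scores_after)
              - sum(scores_before) / len(scores_before))
print(f"within-cohort r = {within_r:.3f}, between-cohort gap = {cohort_gap:.2f}")
```

In this sketch a researcher who looks only inside the later cohort finds essentially no correlation between hours and scores, while the gap between the two cohorts is entirely the product of the environmental change.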

These are just two of many causal models that I believe are more important than the dose-response model. So the next time you hear a skeptic say that the studies can only explain 1 or 2 percent of the variance and, therefore, social media is not harmful, ask whether the skeptic has considered every causal model or just the dose-response one. (The White Hatter does discuss the sensitive period model.)

Proposition 6: Pascal’s Wager: You should calculate the risk of listening to each side.

Philosopher and mathematician Blaise Pascal famously calculated that it’s worth believing in God even if you think there is only a remote chance that God exists because if God does not exist, then the harm to you from living in a Godly way unnecessarily is minimal, and in any case, you only have to keep it up for a few decades. But if God does exist and if the decision about whether you’ll spend eternity blissed out in heaven or roasting in hell depends on whether you believed in God during your lifetime, well, you do the math.

Let’s try that for the debate between the alarm ringers and the skeptics. I still haven’t worked out exactly what measures I’m going to recommend in my book, but three of the most extreme measures are likely to be:

A) Encourage a norm that parents should not give their children smartphones until high school. Flip phones, Gabb or Pinwheel phones, and Light phones are ideal as first phones for middle school students. You can see a list of smartphone alternatives here, at Wait Until 8th. Such phones are all about communication, which is good. (I support Wait Until 8th; I just think it should be Wait Until 9th.)

B) Encourage all K-12 schools to be phone-free during the school day. Students should put their phones into a phone locker near the entrance when they arrive, or store them in a locked pouch which they can open at the end of the day. (I will have an entire post on this in a few weeks, presenting research on how phones in students’ pockets disrupt their focus, learning, and socializing.)

C) Congress should raise the age of “internet adulthood” from the current 13 (which was set in 1998 before we knew what the internet would become) to 16, and enforce it by mandating that the platforms use age verification procedures, a variety of which already exist.4

What damage is done if these measures are enacted and it later turns out that I and the other alarm ringers are wrong? Does anyone think that teen girls will become more anxious if they can no longer open an Instagram account at age 10? Does anyone think that children will learn less if they can’t text their friends during class? I should also point out that my three recommendations cost pretty close to zero dollars (just the cost of phone lockers). Furthermore, my second recommendation can be tested experimentally beginning this fall if we can get states or school districts to randomly assign some middle schools to go phone-free and some to maintain their current policies. (I’ll say more about such experiments in a future post on phone-free schools.)

But if nothing is done and it later turns out that the alarm-ringers were right, then the trends that Zach and I have illustrated throughout this Substack will keep getting worse, drawing year after year of pre-teens into the suffering currently consuming their older siblings.
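
For readers who want to "do the math," the wager can be written as a simple expected-cost comparison. This is a rough sketch, not an estimate: the function names are hypothetical, the two cost figures are placeholder numbers in arbitrary units, and the sketch assumes that acting fully prevents the harm if the alarm ringers turn out to be right.

```python
def expected_cost(p_alarm_right, cost_of_acting, harm_if_ignored, act):
    """Expected cost of a choice, in arbitrary units.

    Assumption: if we act, we pay only the cost of acting; if we do not act,
    we bear the harm only in the world where the alarm ringers are right.
    """
    if act:
        return cost_of_acting
    return p_alarm_right * harm_if_ignored


def break_even_probability(cost_of_acting, harm_if_ignored):
    """Probability of the alarm ringers being right above which acting is cheaper."""
    return cost_of_acting / harm_if_ignored


# Purely illustrative placeholders, not estimates of real costs or harms.
COST_OF_ACTING = 1.0
HARM_IF_IGNORED = 100.0

for p in (0.05, 0.25, 0.50):
    act = expected_cost(p, COST_OF_ACTING, HARM_IF_IGNORED, act=True)
    wait = expected_cost(p, COST_OF_ACTING, HARM_IF_IGNORED, act=False)
    print(f"p = {p:.2f}: expected cost if we act = {act:.1f}, if we wait = {wait:.1f}")

print(f"break-even probability = {break_even_probability(COST_OF_ACTING, HARM_IF_IGNORED):.2f}")
```

The design choice here is deliberate: when the cost of acting is small relative to the potential harm, the break-even probability is low, so one does not need to be confident that the alarm ringers are right for action to have the lower expected cost.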

Conclusion

It has been six years since Jean Twenge first rang the alarm in her Atlantic essay Have Smartphones Destroyed a Generation? In those six years, the platforms have done little to reduce underage use, and the federal government has done nothing at all to mandate change to a consumer product that seems, from the preponderance of the evidence, to be harming millions of teens. The age at which children get their first smartphone continues to drop, and rates of mental illness continue to rise. We are moving backward. It’s time for a national and even global discussion about whether smartphones and social media interfere with healthy adolescent development.

Society needs skeptics to raise counterarguments against alarm ringers and to point out weaknesses in our evidence. I am fortunate to have elicited such constructive critiques from four social scientists. Ultimately, parents, educators, and legislators must examine the arguments on both sides and then weigh the costs and risks of action, and of inaction.

Addendum, May 31, 2023: Here are the published responses to this post:

Not Every Study on Teen Depression and Social Media Is Bad. Only Most of Them. By Aaron Brown.

See Gotz, Gosling, & Rentfrow (2021) on how small effects are “The Indispensable Foundation for a Cumulative Psychological Science.” See Primbs et al. (2022) for a response.

We had argued earlier that Orben and Przybylski had made 6 choices in their analysis that collectively reduced a substantial correlation down to nearly zero. When we re-ran their analysis, in addition to limiting our analysis to girls and social media, we also only added controls for demographic variables, as is typically done, rather than for some psychological variables that were correlated with poor mental health, and we used scale means rather than using individual scale items.

Orben’s statement includes negative signs because she chose to label the mental health outcome in positive terms, “well being,” rather than “depression and anxiety.”

Many people assume that the only way to verify ages is to require that everyone show a government-issued ID to a platform before being permitted to open an account. But there are already so many companies that have found innovative ways to verify age that they have their own trade association. Clear, which many of us use at airports, already offers this service to speed up purchases of alcohol at sporting events. I list some methods and companies here. If age verification is mandated, many more techniques for rapid and reliable verification will quickly come to market.

I thought White Hatter's preferred explanation was unlikely. "There's been a rise in misogyny, racism, and homophobia". I came of age in the 80s and all I can say in response is: "huh?" All that stuff was way worse back then.

This seems like "every bad thing is caused by this thing that I don't like" reasoning.

Note: I am a technologist, so my work supports the possibility of social media through creating the basis for the hardware it runs on.

After raising 5 children, and now helping with 6 grandchildren, having grown up in an age with lots of freedom and access to nature, I take a principled view that is not based on data, just simple observation. Humans evolved in nature. Culture formed in nature. Now, culture has grown so large it has flipped, and nature is in culture.

This causes many problems, and the antidote is almost always to reverse the relationship on a regular basis. Humans need to be in nature. Humans need to interact face to face. Humans need to play with each other. When this need is satisfied, all the cultural overlays are checked, and there is little harm. But, cut all this out, isolate and live totally in culture, especially a virtual one, and shit is gonna happen.

This implies to me that withholding social media from youth does not solve the basic problem. The problem manifests in other ways, such as no more recess, no more sports, no more outdoor play, and so forth. You must address the bigger problem of our relationship to nature and each other.